Not everything that counts can be counted. And not everything that can be counted counts. – William Bruce Cameron

Do vaccination campaigns increase immunization rates in young children? Do home-visiting programs for new mothers increase exclusive breastfeeding? Studies designed to answer these questions are known as health impact evaluations and are key for global health policy-making.

Counterfactual statistical approaches have long been hailed as the gold standard for health impact evaluations. These approaches are modelled on the statistical paradigm of randomized controlled trials. In public health this usually means that certain clusters of people (schools, health facilities or villages) are randomly allocated to receive the intervention while others are not. Primary health outcomes (e.g. immunization or breastfeeding rates) are then compared, often in before-after comparisons, to quantify the intervention’s impact.
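To make the arithmetic of such a comparison concrete, here is a minimal sketch of one common way to combine a before-after comparison with a control group, often called a difference-in-differences estimate. The cluster names and coverage figures are made up for illustration, and the function names are our own. A real evaluation would also need to account for clustering and statistical uncertainty, which this sketch ignores.

```python
from statistics import mean

# Hypothetical immunization coverage (%) per cluster.
# All names and numbers are invented for illustration only.
clusters = [
    {"name": "village_a", "arm": "intervention", "before": 52.0, "after": 70.0},
    {"name": "village_b", "arm": "intervention", "before": 48.0, "after": 64.0},
    {"name": "village_c", "arm": "control", "before": 50.0, "after": 56.0},
    {"name": "village_d", "arm": "control", "before": 54.0, "after": 58.0},
]


def mean_change(rows, arm):
    """Average before-to-after change across the clusters in one arm."""
    return mean(r["after"] - r["before"] for r in rows if r["arm"] == arm)


def difference_in_differences(rows):
    """Change in the intervention arm minus change in the control arm."""
    return mean_change(rows, "intervention") - mean_change(rows, "control")


impact = difference_in_differences(clusters)
print(f"Estimated impact: {impact:.1f} percentage points")
```

With these invented figures, coverage rises by 17 points on average in the intervention clusters and by 5 points in the control clusters, so the estimated impact is 12 percentage points. The number says how much changed, not why.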

However, it is now widely recognized in public health that these counterfactual approaches have many limitations. First, they are difficult to implement: it is often not feasible to allocate the intervention to only some people (or groups of people). Perhaps more importantly, counterfactual approaches do not provide the most useful answers for policy planning. They provide very context-specific information about an intervention: did the intervention work then and there? But why did these changes occur (or not)? With counterfactual approaches we cannot answer this question: we don’t know what’s in the ‘black box’, that is, what mechanisms were set in motion. This is especially important when no changes can be seen. Why is that the case? What should be done differently next time?

Mixed-methods evaluations, which integrate statistical methods with qualitative research methods, are a very powerful way to overcome this problem: they allow evaluators to peek into the black box. Quantitative methods for evaluations include surveys and analysis of routine data. The most commonly used qualitative methods are focus group discussions, in-depth interviews and structured observations. Quantitative methods determine whether there was an effect and how large it was, while qualitative methods help to explore how, why and for whom the intervention worked. Mixed-methods evaluations can thus provide a comprehensive understanding of the extent of, and reasons for, change (or lack thereof) in a health intervention.

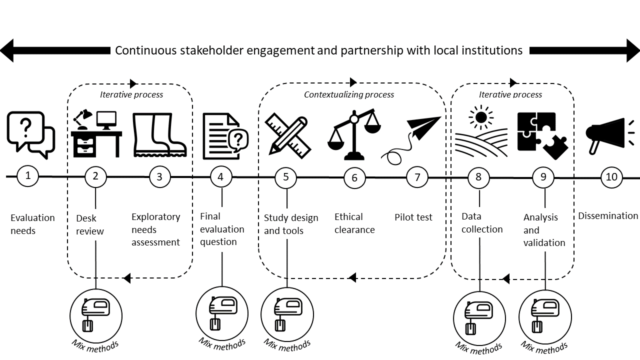

To ensure the success of a mixed-methods impact evaluation, it is important to mix methods from the start of the evaluation and throughout, so that the methods optimally complement each other. In Figure 1 and below we describe the approach developed by evaluators from KIT Royal Tropical Institute, building on many years’ experience evaluating health interventions for a range of bilateral and multilateral donors. An important premise is that a team of experienced evaluators with method-specific expertise should be put together. We believe it is imperative to include local researchers, to ensure that the evaluation questions, methods, results and dissemination are adequately contextualized.

Step 1: Evaluation needs

While many evaluations are based on terms of reference developed by the stakeholders who will use the evaluation results, it is critical to take time at the outset of an evaluation to fully understand and further define its aim and scope. This starts with detailing the change that an intervention aims to achieve and the intended pathways, i.e. the intervention’s theory of change.

Step 2: Desk review

Based on the aims and scope identified, the evaluation can be further developed by reviewing all project data and documents. At this stage it is important to understand which life-cycle stage the project is currently in, whether there is any scope for randomization, and what data have been generated by the intervention and whether these can be meaningfully used for the evaluation.

Mixing moment: During this stage it is important to take stock of both qualitative and quantitative data available and to align these in order to identify information gaps.

Step 3: Exploratory needs assessment

In some cases field visits may be needed to understand the reality on the ground. This is an ideal moment to gauge the perspectives of various stakeholders, including beneficiaries, on the intervention. These consultations should provide insights into the actual pathways of change, in order to better understand the intervention’s theory of change. For this it is important to ask stakeholders what changes they expected, which changes have taken place, and what the driving factors were. This step can also be a way to get buy-in and support for the evaluation activities. Several iterations may be necessary: during an exploratory needs assessment one may need to go back to the data sources identified earlier and reassess them in the light of new leads.

Step 4: Final evaluation questions

All this preparatory work makes it possible to finalise the evaluation objectives and questions, embedded within an evaluation framework and a theory of change.

Mixing moment: The final evaluation questions should enable a comprehensive understanding of the intervention. There is a risk that each sub-question ends up tied to a single methodology (quantitative or qualitative), resulting in parallel yet unconnected streams of research. This can be avoided by reflecting on the qualitative and quantitative information needs for each sub-question in light of the theory of change.
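One way to make this reflection tangible is to lay the sub-questions out in a simple matrix and flag those that currently rely on only one type of method. The sketch below is purely illustrative: the sub-questions, data sources and function name are hypothetical and not taken from any particular evaluation. A flagged question is not necessarily a problem, only a prompt to check whether the other type of method could add something.

```python
# Hypothetical evaluation matrix: each sub-question lists the quantitative
# and qualitative sources planned to answer it. All entries are invented
# for illustration.
evaluation_matrix = {
    "Did exclusive breastfeeding rates change?": {
        "quantitative": ["household survey"],
        "qualitative": [],
    },
    "Why did mothers take up (or decline) home visits?": {
        "quantitative": [],
        "qualitative": ["in-depth interviews", "focus group discussions"],
    },
    "For whom did the intervention work?": {
        "quantitative": ["routine facility data"],
        "qualitative": ["in-depth interviews"],
    },
}


def single_method_questions(matrix):
    """Return the sub-questions planned with only one type of method."""
    return [
        question
        for question, needs in matrix.items()
        if not (needs["quantitative"] and needs["qualitative"])
    ]


flagged = single_method_questions(evaluation_matrix)
for question in flagged:
    print("Revisit:", question)
```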

Step 5: Study design and tools

Research tools (e.g. questionnaires or qualitative data collection guides) should be as valid and reliable as possible, and field procedures should be clearly described.

Mixing moment: Tools for quantitative and qualitative data collection should be complementary and ensure that all evaluation questions can be answered. For quantitative data collection, tools should preferably build on existing validated tools. For qualitative data collection, guides usually have to be designed from scratch in order to be fully relevant in the given context.

Step 6: Ethical review

Most health-related evaluations these days require ethical review. It is important to check the requirements of the local research ethics committee.

Step 7: Pilot test

Evaluation tools should be piloted with a small number of respondents to assess their comprehensibility, acceptability and feasibility. This is key to ensuring that tools are adequately contextualized.

Step 8: Field data collection

When a tool is considered satisfactory after one or more rounds of pilot testing, it is then applied to the target population. Depending on the design (e.g. for before/after comparisons), data collection can be conducted at multiple time-points.

Mixing moment: While qualitative and quantitative data are often collected in parallel, they can complement each other better in a sequential approach. Qualitative data collection could take place at the start of an evaluation to explore a given issue; based on the qualitative results, quantitative data collection may then be adapted to measure the size and distribution of the effects identified. The reverse can also be done: quantitative data collection first, followed by qualitative data collection to explain the quantitative results.

Step 9: Analysis

The analysis phase is the most important mixing moment, ultimately leading to evaluation results which comprehensively address evaluation needs. Discussing preliminary findings with key stakeholders is a very powerful way to validate findings and conclusions.

Mixing moment: Mixed-methods analysis is typically an iterative process. Qualitative data analysis starts at the time of field work to inform ongoing data collection and ensure that all emerging themes are being explored. This is followed by further rounds with a preliminary description of both quantitative data and qualitative themes. The following step includes inferential statistical analysis (incorporating the themes that emerged from the qualitative analysis) and synthesis of the qualitative data (incorporating themes that emerged from the quantitative analyses). Finally, the resulting analyses need to be synthesized comprehensively along the lines of the theory of change to understand which changes actually took place, and how, why and for whom.

Step 10: Dissemination

For effective dissemination, evaluation findings need to be communicated in forms that enable potential users to readily understand and use them. It is also important to inform beneficiaries about the findings of the evaluation in a meaningful and appropriate way. Participatory approaches that engage stakeholders in compiling the findings for dissemination are especially useful for this, but evaluators also need to take care to maintain their own objectivity in the process.

About the authors

Sandra Alba is an epidemiologist at KIT Royal Tropical Institute with a background in medical statistics. She has 15 years’ experience in the application of statistical and epidemiological methods to evaluate public health programmes, primarily in low- and middle-income countries.

Pam Baatsen is a cultural anthropologist at KIT with more than 25 years of experience in managing and evaluating large scale, complex health programmes and performing mixed methods research.

Lucie Blok is a medical doctor by training and a health systems advisor at KIT. For over 30 years she has focused on health systems strengthening and on improving programme effectiveness for tuberculosis, AIDS and other diseases through monitoring, evaluation and learning.

Jurrien Toonen is also a medical doctor by training with over 30 years’ experience in health systems’ financing, health insurance and performance-based financing. Over the years he has developed specific expertise in monitoring and evaluating these types of health interventions.

Competing Interests

We have read and understood the BMJ Group policy on declaration of interests and declare no conflicts of interest.