Since 2012, five collaborative efforts to quantify the benefits and harms of breast screening have been published. These are the UK Independent Review, the EUROSCREEN Working Group series (both 2012), the Swiss Medical Board report (2014), the updated IARC/WHO Handbook, and a report from the Research Council of Norway (both 2015). The approach to assembling panel members, and the methods used to review the evidence, have varied considerably. So have estimates and recommendations. The Swiss report found that harms outweighed benefits and recommended clearly against the intervention, whereas the EUROSCREEN Working Group and the IARC/WHO panel found the opposite. The UK and Norwegian reports were somewhere in between, estimating that three and five women, respectively, are overdiagnosed for each woman who has her life extended. They recommended screening, but also recognized that the balance is delicate and acknowledged the need for informed decisions.

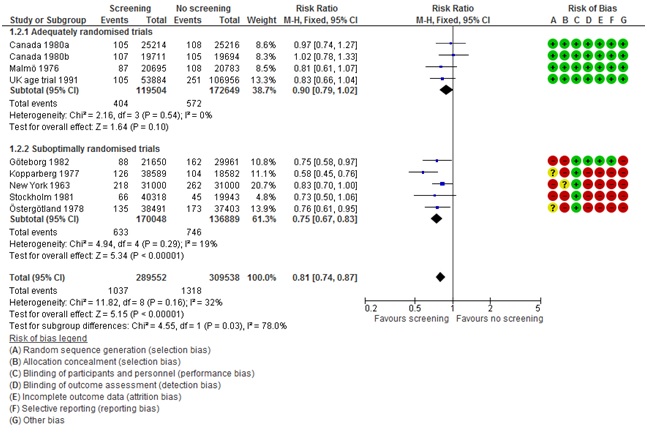

The reports spring from substantial scientific disagreement about breast screening. There have always been concerns, but the quantity and quality of scientific papers that have challenged the benefit and documented the harms have exploded since a Cochrane review of breast screening was published 14 years ago. Indeed, the two major conclusions from this review that sparked debate are still what largely determine the differences in recommendations. The first issue is how to interpret the results of the randomised trials. The Cochrane review considered the methodological quality of the trials and found that some were more reliable than others, mainly due to problems with the randomisation and the blinding of outcome assessment in some trials. The most reliable trials could not demonstrate a statistically significant benefit (relative risk 0.90, 95% CI 0.79 to 1.02), whereas the poor-quality trials could (relative risk 0.75, 95% CI 0.67 to 0.83), and the difference between the two groups of trials was statistically significant, i.e. the results were sensitive to methodological quality (I²=78%, P=0.03). This was fiercely criticized at the time of publication, but the approach has since become standard in Cochrane reviews, which now feature a risk of bias table next to the forest plot, so that the reader can judge whether there is a problem (figure 1). It is now also a standard approach in evidence-based guidelines. The second issue is overdiagnosis, which the Cochrane review highlighted as the major harm, and which was so controversial that the Cochrane editors refused to publish it. Fortunately, the Lancet published the results. Overdiagnosis is broadly accepted today and the estimate from the Cochrane review was recognized in the reports from the UK, Switzerland, and Norway, although it is certainly a problem that the trials were not designed to quantify it. Alas, the harms of interventions are often not the main concern of trialists.
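For readers who want to see where a figure such as I²=78%, P=0.03 can come from, the comparison between the two groups of trials can be approximated from the pooled estimates quoted above. The following is only a minimal sketch: it assumes the confidence intervals are symmetric on the log scale and that the two groups are independent, and it uses only the two pooled relative risks rather than the trial-level data the Cochrane review worked from. The function names are illustrative and not taken from any review software.

```python
import math

def log_rr_and_se(rr, ci_low, ci_high, z=1.96):
    """Return the log relative risk and its standard error,
    recovered from a 95% confidence interval (assumed symmetric on the log scale)."""
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z)
    return math.log(rr), se

def subgroup_difference(group_a, group_b):
    """Test whether two pooled relative risks differ.

    Each group is a tuple (rr, ci_low, ci_high).
    Returns (Q, p_value, I2_percent) for a chi-square test with 1 degree of freedom.
    """
    log_a, se_a = log_rr_and_se(*group_a)
    log_b, se_b = log_rr_and_se(*group_b)
    diff = log_a - log_b
    se_diff = math.sqrt(se_a ** 2 + se_b ** 2)
    q = (diff / se_diff) ** 2
    # Upper tail of a chi-square distribution with 1 degree of freedom.
    p_value = math.erfc(math.sqrt(q / 2))
    # I2 = (Q - df) / Q, truncated at zero; df = 1 for two groups.
    i2 = 100 * max(0.0, (q - 1) / q) if q > 0 else 0.0
    return q, p_value, i2

# Pooled estimates quoted in the text above.
most_reliable = (0.90, 0.79, 1.02)
poor_quality = (0.75, 0.67, 0.83)

q, p_value, i2 = subgroup_difference(most_reliable, poor_quality)
print(f"Q = {q:.2f}, P = {p_value:.3f}, I2 = {i2:.0f}%")
```

Under these assumptions the sketch gives an I² of roughly 78% and a P value of about 0.03, which is consistent with the figures reported for the difference between the two groups of trials.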

Only the Swiss report took the quality of the trials into account. It also included an analysis of total mortality in the trials (deaths from any cause), which showed that the randomisation did not always create comparable groups: some trials showed a difference, although none was powered to detect one. The UK report excluded one trial, the Edinburgh trial, due to concerns about cluster randomisation, but it is a major criticism that the UK report did not apply standard methods to assess trial quality. The recent IARC/WHO report appears to have considered all trials as equally reliable, including the Edinburgh trial. Their reasons for doing this are obscure, as the full handbook is not published, and may not be for a year. It is a shortcoming that a summary was published, accompanied by strong conclusions in the media from key group members, when the full handbook is not available for peer scrutiny. It is also a shortcoming that the summary does not present benefits and harms in a comparable way.

While the panels for the UK and Swiss reports consisted of recognized experts in fields not directly associated with, but relevant for, breast screening, the panel members of the WHO/IARC report were primarily chosen among researchers of breast screening. However, the process of selection is not clear, and key members are predominantly from one side of the scientific controversy. One member has already criticized the report. All the “Big Five” journals have published papers that have raised substantial doubts about whether breast screening does more good than harm, but none of the authors were panel members. The WHO/IARC claimed to have chosen members so that the panel was balanced and without conflicts of interest. But several key members have advocated strongly for breast screening for decades, are employed in patient organisations that have advocated and lobbied for breast screening, or are heads of national screening programmes. The claimed absence of political and intellectual conflicts of interest seems difficult to justify. There is also substantial overlap between key IARC/WHO panel members and the EUROSCREEN Working Group. It is therefore not surprising that estimates and conclusions also overlap. This becomes a particular problem when the observational studies are evaluated. Great emphasis is placed on modelling and case-control studies, although they are unreliable for interventions with uncertain effects, such as screening. Interestingly, the studies that the IARC/WHO panel and the EUROSCREEN Working Group found most reliable had panel members as authors. Both the selection of panel members, and the public announcement of strong pro-screening messages in a field of substantial scientific controversy without access to the analyses behind them, are a serious threat to the credibility and authority of the WHO and IARC.

Essentially, the reports are attempts to produce clinical guidelines for a medical intervention. These should be evidence-based, and there are procedures for doing this that are becoming standard. Breast screening does not have any special feature to make it exempt from this requirement. The procedures include consideration of the methodological quality of the trials using Cochrane’s risk of bias tool, but also the GRADE approach to assessing the confidence in effect estimates and moving from evidence to recommendations. Using the risk of bias tool is fairly straightforward: the risks are either there (e.g. cluster randomisation was used) or not (e.g. individual randomisation was used). Sensitivity analyses are recommended when there is substantial variation between trial results (heterogeneity), because they help to substantiate the likely reasons for the variation. The GRADE handbook is quite clear about what to do next: “If study methods provide a compelling explanation for differences in results between studies, then authors should consider focusing on effect estimates from studies with a lower risk of bias” (p. 17). This is sound advice, not just in breast screening. In a situation where the benefit is uncertain and the harms are substantial, including those from false positive tests with associated psychological stress and biopsies, this would lead to a recommendation against the intervention, most likely a strong one.

These are my personal views after having studied breast cancer screening for the past 12 years, and having been involved in evidence-based guideline work for the past 3 years.

Figure 1. Forest plot and risk of bias table from the Cochrane review on breast screening.

Karsten Juhl Jørgensen is a senior researcher at the Nordic Cochrane Centre in Copenhagen, and a methods consultant for the Danish Health and Medicines Agency for their evidence-based, national clinical guidelines.

Competing interests: None declared.