Peer review seems to be researchers’ favourite whipping boy. Whenever two or three academics gather together, they tell each other horror stories of the journals, granting bodies and peer reviewers who have failed to recognise the latest great work. My perspective on this may be skewed by a couple of decades spent as an editor at selective journals—but there is serious work to suggest that the business of selecting and rewarding research is not working optimally.

Earlier this year, four luminaries of American biomedical research (Bruce Alberts, Marc Kirschner, Shirley Tilghman, and Harold Varmus) wrote about the systemic flaws in science in the USA, and highlighted peer review among them. The Nuffield Council on Bioethics conducted an enquiry into the culture of research in the United Kingdom, including a survey of many practising researchers in a number of disciplines. Like Alberts and his coauthors, the Nuffield report concludes that peer review is part of the problem in a system that now encourages “hyper-competitiveness” and favours numerical assessments of the quality of research.

But what is the evidence that peer review and selection by journals are failing? A study published this week by Kyle Siler, Kirby Lee, and Lisa Bero aims to “measure the effectiveness of scientific gatekeeping” by three selective medical journals, including The BMJ. Their methods section notes:

“To analyze the effectiveness of peer review, we compared the fates of accepted and rejected—but eventually published—manuscripts initially submitted to three leading medical journals in 2003 and 2004, all ranked in the top 10 journals in the Institute for Scientific Information Science Citation Index. These journals are Annals of Internal Medicine, British Medical Journal [sic], and The Lancet. In particular, we examined how many citations published articles eventually garnered, whether they were published in one of our three focal journals or rejected and eventually published in another journal.”

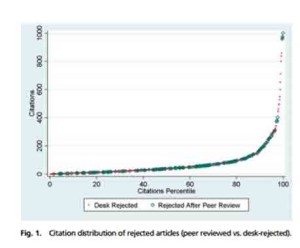

They note that citation is an imperfect measure of the quality of work—and indeed the Research section editors of The BMJ would note that they do not aim to select work for its likely future citations but, for example, for its potential impact on clinical practice, and the breadth of its interest. Nevertheless, all editors will surely take note of the finding by Siler et al, that 14 of the most highly cited papers in their sample of 757 (that were eventually published elsewhere) were rejected by a highly ranked medical journal. In 12 of these cases, the articles were “desk rejected”—by editors rather than peer reviewers—and Siler et al suggest that this means that scientific gatekeeping has a problem with the exceptional or unconventional.

Without delving deeply into the individual articles highlighted by Siler et al, it is hard to be certain of the full meaning of their findings. But one point is made plain by their study: peer review is not the same as gatekeeping by the editors of journals. When peer review is blamed for all rejections from journals, the complainers are, in my view, conflating two processes. One is the technical assessment of a piece of work: has it been properly done, and are the conclusions adequately supported? This type of technical assessment must of necessity be done by peers, those in the specialist area who know the issues best. The other is the selection of the most interesting or important work, or the pieces that are most likely to appeal to a particular journal’s readership. This type of assessment is routinely made by editors, whether they are full-time staff or academics working part time as editors. (In making this assessment, the editors often consult the same peers whose expert advice they have asked on the technical issues, which muddies the distinction.)

We now have the technological wherewithal to evaluate individual articles by the attention they receive after they are published, whether in terms of downloads, citations or social media attention. Some would argue that this will erode the role of selective journals and their contribution to the culture of research. Others note that in a world of increasing availability of information it becomes more important than ever to have reliable filters and highlighters of work likely to be of interest. The Nuffield report takes a welcome balanced view: that while we are likely to see an increasing role for postpublication commenting and assessment of the value of work, we are also likely to have prepublication peer review and selection for some time to come, and so we should work to make this operate as well as possible. Making peer review more open and transparent (as The BMJ has strived to do), and doing research on what works and what doesn’t (as Siler et al have done), can only help.

Theodora Bloom is executive editor of The BMJ.

Correction: When this blog was first published, Kyle Siler’s surname was incorrectly spelt as “Silera”. This was corrected on 8 January 2015. Apologies for this error.