Evidence generated from “real world” data (e.g. administrative claims and electronic health record databases), alongside clinical trials, is highly valuable for regulatory, coverage, and clinical decisions. While randomized clinical trials (RCTs) are considered the gold standard, they are typically conducted in highly restricted populations, which limits their generalizability. Furthermore, the expense of conducting trials limits their sample size and their ability to detect rare but serious adverse effects. In contrast, evidence generated from “real world” data reflects effectiveness and safety in large, representative patient populations treated as part of routine clinical practice. The importance of “real world” data in building the evidence base for the risk-benefit profile of drugs and other medical products is reflected in recent policies such as Adaptive Pathways in the European Union and the 21st Century Cures Act and the Prescription Drug User Fee Act (PDUFA) VI in the United States. Each of these policies has sections designed to advance the use of “real world” evidence to support regulatory decisions. However, because the quality of database studies is highly variable, decision makers are currently unsure how to use the evidence they produce.

Equipping decision makers around the world with clear and transparent reporting of non-interventional database studies would enable effective screening for credible, high-quality, and robust research to inform high-stakes decisions. A change in the culture of database research conduct and reporting is both timely and necessary. To improve the credibility of evidence from database research, reporting must be transparent enough that the replicability of findings can be verified.

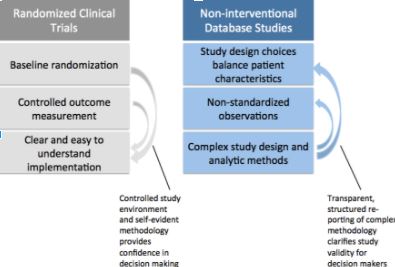

Although RCTs are rarely replicated, decision makers like them because the methodology is easy for non-researchers to understand. Baseline randomization and planned outcome measurement reduce bias, and together these features build confidence in the findings. In non-interventional database studies, the lack of randomization and concerns about the quality of outcome measurement can be addressed with robust design and analytic methods. However, regulatory, clinical, and health technology assessment decision makers need to be better equipped to discern whether evidence from a database study is valid and relevant to their question. To make that assessment, they need greater clarity about how the study was implemented. If all relevant details of a database study were reported in a transparent and structured way, database study quality could be assessed quickly, and independent replication of the evidence would be easier. But what level of detail should be reported?

The ultimate measure of transparency is the ability to replicate findings by applying the same reported methods to the same data source. While code and data sharing should be encouraged whenever intellectual property and data licensing permit, important scientific decisions can be obscured in the thousands of lines of code needed to create a study population from complex longitudinal healthcare data. Therefore, structured reporting that describes key design and analysis parameters in clear, natural language is critical for fast and comprehensive understanding of studies, so that decisions can be made in a timely manner and with confidence.

Transparency does not equal validity. Rather, it is an absolute prerequisite for validity assessment. Unless greater transparency is achieved, flawed database studies will continue to be lumped together with rigorous, well-designed database studies. This amplifies the reach of flawed evidence while diminishing the influence of robust database evidence. Recognizing the value of greater transparency, two leading professional societies that work extensively with healthcare databases, the International Society for Pharmacoepidemiology (ISPE) and the International Society for Pharmacoeconomics and Outcomes Research (ISPOR), created a joint task force focused on transparency both in the process of conducting such studies and in reporting the parameters required to make them reproducible. [1,2]

The REPEAT Initiative has begun a project designed to further improve clarity and transparency in reporting on database study implementation. REPEAT will produce empirically based recommendations that balance the detail necessary for transparency against the incentives for researchers to create and share structured technical reports. First, REPEAT will measure the current level of transparency in reporting of specific operational and scientific decisions for a random sample of 250 database studies recently published in leading clinical and epidemiology journals. The frequency of problems such as the inclusion of immortal time, adjustment for causal intermediates, and other flawed design choices known to induce bias will be tabulated. Second, REPEAT will evaluate the replicability of study populations and findings from the sampled studies by applying the same reported methods to the same data sources. Finally, REPEAT will evaluate the robustness of the main findings to plausible alternative parameter choices, to assumptions regarding confounding and misclassification, and to positive and negative control outcomes. For studies with recognized biases in design or analysis, the original findings will be compared with those obtained after implementing more appropriate choices.

Shirley Wang is assistant professor of medicine at Harvard Medical School in the Division of Pharmacoepidemiology and Pharmacoeconomics of the Brigham and Women’s Hospital in Boston.

Sebastian Schneeweiss is professor of Medicine and Epidemiology at Harvard Medical School and Vice Chief of the Division of Pharmacoepidemiology and Pharmacoeconomics of the Brigham and Women’s Hospital in Boston.

Competing interests: The REPEAT initiative is funded by the Laura and John Arnold Foundation.

This project is registered with ENCePP (EUPAS19636).

References:

1. Wang SV, Schneeweiss S, Berger ML, et al. Reporting to improve reproducibility and facilitate validity assessment for healthcare database studies V1.0. Pharmacoepidemiology and Drug Safety. Sep 2017;26(9):1018-1032.

2. Berger ML, Sox H, Willke RJ, et al. Good practices for real-world data studies of treatment and/or comparative effectiveness: recommendations from the joint ISPOR-ISPE Special Task Force on real-world evidence in health care decision making. Pharmacoepidemiology and Drug Safety. Sep 2017;26(9):1033-1039.