How does the replicability crisis relate to the estimated 85% waste in medical research?

How does the “replicability crisis” relate to the estimated 85% waste in biomedical research? While both issues have attracted considerable attention, they are usually written about separately, as if they were separate problems. They are not: replicability and research waste are intimately intertwined. Poor research design and poor reporting confound attempts at replication, and non-publication can hide “negative” studies that contradict “positive” published studies.

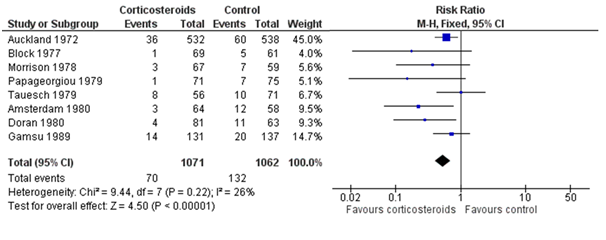

Replication in healthcare research is already common, but without systematic search and review, replications can be overlooked. The figure shows the results of eight studies, in chronological order, asking whether antenatal corticosteroids in preterm labour can reduce early neonatal death. The first study (Auckland) found a clear reduction in death; of the seven “replications,” only two (Amsterdam, Doran) found a statistically significant reduction, and hence “replicated” the statistical inference of the first study. However, the plot also makes clear that most studies were too small (as shown by their wide confidence intervals) to detect the reduction reliably, whereas combining their results (the summary “diamond” marked as “Total”) strengthens the initial statistical inference.

Figure: Meta-analysis of trials before 1980 of corticosteroid therapy in premature labour and its effect on early neonatal death (From Cochrane.org)
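To make the pooling step concrete, here is a minimal sketch of why several individually inconclusive studies can still give a clear combined answer. It uses inverse-variance fixed-effect pooling, which is one common approach and not necessarily the method behind the figure. The effect estimates and standard errors are invented for illustration and are not the results of the trials in the forest plot; the names `studies`, `pool_fixed_effect`, and `ci95` are likewise hypothetical.

```python
import math

# HYPOTHETICAL (log odds ratio, standard error) pairs, invented for illustration.
# They are NOT the data from the corticosteroid trials shown in the figure.
studies = [(-0.60, 0.25), (-0.30, 0.55), (-0.45, 0.40), (0.10, 0.70)]


def pool_fixed_effect(estimates):
    """Combine (estimate, standard_error) pairs by inverse-variance weighting."""
    weights = [1.0 / se ** 2 for _, se in estimates]
    total_weight = sum(weights)
    pooled = sum(w * est for w, (est, _) in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


def ci95(estimate, se):
    """Approximate 95% confidence interval using the normal quantile 1.96."""
    return estimate - 1.96 * se, estimate + 1.96 * se


for est, se in studies:
    low, high = ci95(est, se)
    # An interval that excludes 0 on the log scale is "statistically significant".
    print(f"study:  {est:+.2f}  95% CI ({low:+.2f}, {high:+.2f})  "
          f"significant={high < 0 or low > 0}")

pooled, pooled_se = pool_fixed_effect(studies)
pooled_low, pooled_high = ci95(pooled, pooled_se)
print(f"pooled: {pooled:+.2f}  95% CI ({pooled_low:+.2f}, {pooled_high:+.2f})")
```

Because the pooled weight is the sum of the individual weights, the pooled standard error is always smaller than that of any single study, which is why the summary diamond in a forest plot is narrower than the individual intervals.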

The plot also shows some differences among studies in the estimated effects, which may be beyond chance variation. However, analysis of these differences is often hindered by poor reporting of methods and results, and by unpublished studies. Hence wasteful research practices hinder understanding of replicability.

To understand this relationship between research waste and replication in more detail, let’s consider the four main stages of waste in research: (1) the research questions asked, (2) avoidable flaws in study design, (3) completed but unpublished research, and (4) poor or unusable reports of research. With estimates of around 50% “wastage” at each of the last three stages, the cumulative estimate is over 85%. Each of these stages of research production and waste has an impact on replicability.
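The step from roughly 50% at each of three stages to over 85% overall can be checked with a short calculation. The sketch below assumes that the losses compound multiplicatively, so that each stage wastes half of whatever survived the previous one; the 0.5 values are the rough figures quoted above, and the function name `cumulative_waste` is only illustrative.

```python
# Rough loss fractions from the text; the exact values are approximations.
stage_losses = {
    "avoidable design flaws": 0.5,
    "completed but unpublished research": 0.5,
    "poor or unusable reports": 0.5,
}


def cumulative_waste(losses):
    """Fraction wasted overall when each stage loses a share of what survived so far."""
    surviving = 1.0
    for loss in losses:
        surviving *= 1.0 - loss
    return 1.0 - surviving


total = cumulative_waste(stage_losses.values())
print(f"Cumulative waste: {total:.1%}")  # prints "Cumulative waste: 87.5%"
```

Under this assumption only one eighth of the research survives all three stages, which is where an estimate of over 85% comes from.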

1) Inadequately informed questions

A necessary prerequisite for the design of worthwhile replication studies is to be aware of previous similar studies. Relying on informal knowledge, or even an unskilled search of the global literature, is a poor guarantee of finding all previous similar studies: a more systematic review should be required before a replication study. If we examine the number of studies included in systematic reviews of studies ordered chronologically (such as in the figure), it is evident that some degree of replication is already quite common. Judgment is required to assess the point in cumulative reviews at which “necessary replication” becomes “wasteful duplication.” Nevertheless, the requirements for reducing waste in research and replication are similar: a systematic review to clarify what uncertainties exist, and a prioritisation process for additional primary research.

2) Avoidable flaws in design

A recent assessment suggested that more than 40% of clinical trials had major avoidable flaws. Replication of the exact study methods would merely replicate those flaws and, at best, confirm the biased results. Hence good replication should be preceded by a critical appraisal of the previous studies, preferably in systematic reviews of all previous similar studies. This would allow researchers to use the best methods and improve on design flaws before attempting replication. Again, reduction of waste in research and replication have a goal in common: to reduce avoidable design flaws that could result in misleading findings.

3) Unpublished studies

If we wish to replicate studies with so called “positive” results with a view to confirming their findings, a first step is to make sure there are no unpublished, failed attempts at replication. Unfortunately, unpublished “negative” studies are not rare—around 50% of clinical trials are not published within seven years of completion, and the unpublished trials are more likely to have “disappointing” results.

Hence a critical element of attempts at replication is to ensure that (1) all trials are registered at inception, and (2) their results are reported—at least within that registry, even if not published in a journal. Of course, this two step call by AllTrials needs to be extended to all types of studies—clinical and pre-clinical—if we are to avoid inadequately informed replication. Again, reduction of waste in research and replication have a goal in common.

4) Poor reporting

Complete and accurate descriptions of study populations, interventions, and research methods are required to design valid replications. If these conditions remain unfulfilled, we will remain uncertain as to whether any failure to replicate reflects differences in methods or differences in findings.

Unfortunately, a large proportion of studies have poorly described study populations and methods. It is helpful to distinguish three types of reproducibility: methods reproducibility, results reproducibility, and inferential reproducibility. The latter two (results and inferential reproducibility) were illustrated in the figure above: a forest plot of multiple studies on the same research question. Even identically conducted studies will never give exactly the same results (the squares in the figure), nor are they all likely to lead to the same statistical inference (based on statistical significance). However, exploring the sources of any differences in results or inferences between studies requires methods reproducibility, which in turn depends on adequate reporting of the methods of previous studies.

Replication studies are likely to be misleading unless their design takes proper account of these four sources of waste in research, each of which can compromise a replication. This is why the current concerns about replication need to encompass efforts to reduce waste at all four stages.

Paul Glasziou is professor of evidence based medicine at Bond University and a part time general practitioner.

Competing interests: None declared.

Between 1978 and 2003, Iain Chalmers helped to establish the National Perinatal Epidemiology Unit and the Cochrane Collaboration. Since 2003 he has coordinated the James Lind Initiative’s contribution to the development of the James Lind Alliance, the James Lind Library, Testing Treatments interactive, and REWARD.

Competing interests: IC declares no competing interests other than his NIHR salary, which requires him to promote better research for better healthcare.

Acknowledgements: Our thanks to Anna Scott for preparing the figure.

References

- Research: increasing value, reducing waste, series at: thelancet.com/series/research

- Munafò MR, Nosek BA, Bishop DMV, et al. A manifesto for reproducible science. Nature Human Behaviour 1, Article number: 0021 (2017). http://www.nature.com/articles/s41562-016-0021

- Glasziou P, Chalmers I. How systematic reviews can reduce waste in research. 29 October 2015. https://blogs.bmj.com/bmj/2015/10/29/how-systematic-reviews-can-reduce-waste-in-research/

- Clarke M, Brice A, Chalmers I. Accumulating research: a systematic account of how cumulative meta-analyses would have provided knowledge, improved health, reduced harm and saved resources. PLoS One 2014;9(7):e102670. doi:10.1371/journal.pone.0102670.

- Yordanov Y, Dechartres A, Porcher R, et al. Avoidable waste of research related to inadequate methods in clinical trials. BMJ 2015;350:h809. http://www.bmj.com/content/350/bmj.h809

- Ross JS, Tse T, Zarin DA, Xu H, Zhou L, Krumholz HM. Publication of NIH funded trials registered in ClinicalTrials.gov: cross sectional analysis. BMJ 2012;344:d7292. http://www.bmj.com/content/344/bmj.d7292

- Goodman SN, Fanelli D, Ioannidis JP. What does research reproducibility mean? Sci Transl Med 2016;8(341):341ps12. doi:10.1126/scitranslmed.aaf5027.