By Cati Brown-Johnson, Sonia Rose Harris & Lisa Goldthwaite

In the February issue of the Journal, Hofmeyr and colleagues describe a postpartum family planning quality improvement initiative in a large public hospital in Botswana. Their initiative aimed to improve postpartum contraceptive counseling and provision, with a particular focus on integrating intrauterine device services into clinical practice while also monitoring patient experience. Their work recognizes that, even with best-practice recommendations in hand, moving from evidence to practice must be shaped by local context and reflect real-life experience.

In our editorial, we praise the authors for reporting this study, especially given its focus on quality improvement and implementation, which can advance the growing field of implementation science. While quality improvement is relatively straightforward (improving the quality of care), implementation science goes a step further. Implementation science develops generalizable knowledge, principles, frameworks, theories, and tools for implementing interventions in real-world contexts. Implementation science, like quality improvement, is all about the how: how is this intervention best delivered locally?

While Hofmeyr et al mention ‘implementation’ only a handful of times in their article, we see its principles throughout, which raises a question: what benefit do we, as researchers and clinicians, gain from calling this quality improvement or implementation science? What is the advantage of using the frameworks and theories of implementation science?

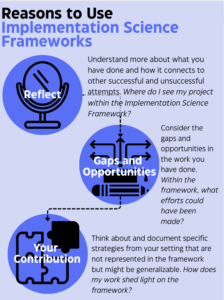

Here’s what we propose in our editorial:

- Reflect: Understand what you have done and how it connects to other successful and unsuccessful attempts.

- Gaps and Opportunities: Consider the gaps and opportunities in the work you have done. Using an implementation framework can reveal opportunities that are not currently reported on, for example, patient involvement.

- Your Contribution: Think about specifics from your setting that are not represented in the framework; these could be your contribution to the framework's evolution.

Another success we note: Hofmeyr et al include patient satisfaction in their program analysis, since talking to patients can help ensure that changes suit the local context. Patient input can be gathered at many points in improvement work, including program evaluation, as this example shows. We encourage those doing improvement work to also consider inviting patient representatives onto project teams early in project development, so that their constructive insights are incorporated from the beginning and patient voices are centered throughout design and implementation.

From our perspective, whether we call it quality improvement, implementation science, or something else, and whether patient perspectives are included at the beginning or only in the final evaluation, what ultimately matters is that we are improving quality of care and communicating with each other about those efforts.

So forget the labels! At the end of the day, we just want to read about your efforts to improve care.

Read the paper from Hofmeyr and colleagues here.

Read the editorial here.

—

About the authors

Cati Brown-Johnson, PhD, is a founding member of the Stanford Evaluation Sciences Unit. In her role as Research Scientist, she developed the Stanford Lightning Report, a rapid assessment tool rooted in implementation science principles. Over the past 10 years, she has focused on healthcare delivery, preventive medicine innovation, and scientific direction for health equity research. Her approach draws on methods from qualitative research, quality improvement, behavior change, and linguistics, as well as Lean management, the Quintuple Aim of healthcare, and design thinking/user experience. Dr. Brown-Johnson believes in the value of Learning Healthcare Systems and is deeply committed to continuous learning and a curious mindset. She works to identify what works and what requires improvement in order to enhance systems and provide patient-centered care.

Dr. Lisa Goldthwaite is a board-certified Obstetrician Gynecologist and Complex Family Planning subspecialist. She completed medical school and residency at Oregon Health & Science University. She subsequently completed a Fellowship in Complex Family Planning at the University of Colorado, while concurrently earning a Master of Public Health from the Colorado School of Public Health. From 2015 to 2022 she was a Clinical Assistant Professor at Stanford University School of Medicine, where she also served as a consultant through the Stanford Program for International Reproductive Education and Services (SPIRES), providing medical education and clinical family planning quality assurance services internationally. Dr. Goldthwaite currently lives and works as a gynecologist in Minneapolis, Minnesota, and serves as the Immediate Postpartum Contraceptive Care Clinical Advisor for Upstream USA. Her professional interests include patient-centered reproductive health care, immediate postpartum contraception, medical education, and health care quality improvement.

Sonia Rose Harris, MPH, is a qualitative research and project manager with Stanford University’s Evaluation Sciences Unit. Sonia earned her bachelor’s degree in social policy and her master’s in public health from Northwestern University, where she focused on child and adolescent injury and violence prevention. After graduating, Sonia worked for multiple non-profits as a sexual health and sexual assault prevention educator. Her professional interests include violence prevention, trauma-informed care, and mental health quality improvement.