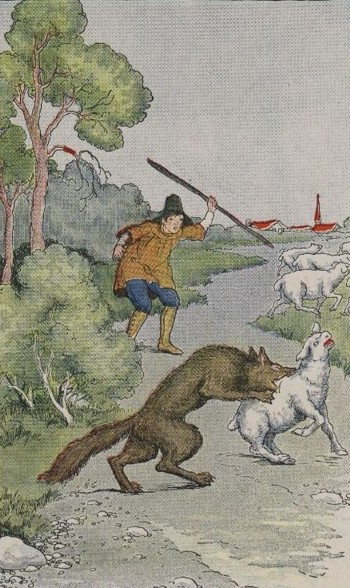

The fable of the bored shepherd boy, alone on a hillside (except for the sheep – sheep don’t count as company*) waiting for something to happen, is one that I hope most of us know and can recount if needed at any dinner table.

In my folk-recollection of the tale, the lad calls “Wolf!” three times. Twice he gets the thrill of a village-load of yokels pounding out to his hillside vantage point; it’s the third, truthful, call that everyone ignores, and the sheep get gobbled up.

How many times can we call “Wolf!” on a ward and get away with it? Or, put another way: how good does a paediatric early warning score (PEWS) need to be before we’ll think it’s useful?

The shepherd’s actually pretty damned good. One true alarm in three calls is a 33% hit rate, which most folk wanting an alert about a life-threatening condition would consider remarkable. What would the acceptable lower limit be, though?

Is 25% good enough?

– 20%?

— 10%?

—- 5%?

—— 2%?
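The shepherd’s 33% and each of the figures above are positive predictive values (PPV): the share of alarms that turn out to be real. Flipping a PPV over (1 / PPV) gives the average number of alarms raised for each genuine event, which is perhaps a more visceral way of seeing the cost. Below is a minimal Python sketch of that arithmetic; the function names are hypothetical helpers for illustration only and are not taken from any PEWS tool.

```python
def ppv(true_alarms: int, total_alarms: int) -> float:
    """Positive predictive value: the fraction of alarms that are real."""
    return true_alarms / total_alarms


def alarms_per_real_event(ppv_value: float) -> float:
    """Average number of alarms raised for each genuine event (1 / PPV)."""
    return 1 / ppv_value


# The shepherd: three calls of "Wolf!", one of them true.
shepherd = ppv(1, 3)

# Walk down the thresholds from the question above.
for p in (shepherd, 0.25, 0.20, 0.10, 0.05, 0.02):
    n = alarms_per_real_event(p)
    print(f"PPV {p:.0%}: about {n:.0f} alarms per real wolf, {n - 1:.0f} of them false")
```

At a PPV of 2% that works out to about 50 alarms for every genuinely deteriorating child, 49 of them false.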

There’s a recent paper that evaluates the NHS-PEWSIII in a cohort of patients in Wales and finds a positive predictive value of 2%. Combine this with the recent evidence summary on PEWS-like systems and you’re in a bit of a dilemma: if a system seems to work but is terribly tiring for the staff answering its alarms, how can it really work on real wards?
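One reason a score can look useful and still yield a very low PPV is that PPV depends on how common deterioration is, not only on how good the score is. Bayes’ theorem makes this concrete. The sketch below is illustrative only: the sensitivity, specificity and prevalence figures are entirely hypothetical and are not taken from the Welsh paper or the evidence summary, and `ppv_from_rates` is a made-up helper name.

```python
def ppv_from_rates(sensitivity: float, specificity: float, prevalence: float) -> float:
    """PPV via Bayes' theorem: P(truly deteriorating | alarm fired)."""
    true_pos = sensitivity * prevalence                # deteriorating children who trigger
    false_pos = (1 - specificity) * (1 - prevalence)   # well children who trigger anyway
    return true_pos / (true_pos + false_pos)


# Hypothetical score: catches 80% of deteriorating children and stays
# quiet for 90% of well ones (assumed figures, not from any study).
print(f"{ppv_from_rates(0.80, 0.90, 0.003):.1%}")  # prints 2.4% (3 in 1,000 deteriorate)
print(f"{ppv_from_rates(0.80, 0.90, 0.03):.1%}")   # prints 19.8% (3 in 100 deteriorate)
```

With these made-up numbers, the same score that manages only about 2.4% on a ward where deterioration is rare reaches roughly 20% where it is ten times as common.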

(There’s a similar issue with referrals for lymphadenopathy, in particular for suspected lymphoma: the “two week wait” systems have been shown repeatedly [1, 2, 3] to have a very low positive predictive value. In this instance, the NICE guideline states explicitly that in adults it works with a 2% threshold, and that in children the threshold should be considerably lower.)

What is the right way to think about induced complacency versus the value of an early warning system? Is the issue simply scarce resources, or something else?

How many times can we call “Wolf!”?

- Archi

* Except in Yorkshire, North Wales, and New Zealand, obviously.